VALL-E

Neural Codec Language Models are Zero-Shot Text to Speech Synthesizers

Paper: https://arxiv.org/abs/2301.02111

Abstract. We introduce a language modeling approach for text to speech synthesis (TTS). Specifically, we train a neural codec language model (called VALL-E) using discrete codes derived from an off-the-shelf neural audio codec model, and regard TTS as a conditional language modeling task rather than continuous signal regression as in previous work. During the pre-training stage, we scale up the TTS training data to 60K hours of English speech which is hundreds of times larger than existing systems. VALL-E emerges in-context learning capabilities and can be used to synthesize high-quality personalized speech with only a 3-second enrolled recording of an unseen speaker as an acoustic prompt. Experiment results show that VALL-E significantly outperforms the state-of-the-art zero-shot TTS system in terms of speech naturalness and speaker similarity. In addition, we find VALL-E could preserve the speaker's emotion and acoustic environment of the acoustic prompt in synthesis.

Official demo page: https://valle-demo.github.io

My implementation: https://github.com/lifeiteng/vall-e

This page shows reproduced results only; it keeps the main parts of the official demo.

Model Configs

| Item | The Paper | LJSpeech Model | LibriTTS Model |
|---|---|---|---|
| Transformer | Dim 1024 Heads 16 Layers 12 | Dim 256 Heads 8 Layers 6 | Dim 1024 Heads 16 Layers 12 |
| Dataset | LibriLight 60K hours | LJSpeech 20 hours | LibriTTS 0.56K hours |
| Machines | 16 x V100 32GB GPU | 1 x RTX 12GB GPU | 1 x RTX 24GB GPU |
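To make the size gap between the configurations in the table concrete, here is a minimal sketch that derives the per-head dimension and a rough weight count from the listed Dim/Heads/Layers values. The class name `TransformerConfig`, the method `approx_params`, and the feed-forward multiplier of 4 are assumptions made for illustration; they are not taken from the linked implementation, and the estimate ignores embeddings, biases, and normalization layers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TransformerConfig:
    """Transformer sizes as listed in the Model Configs table (hypothetical helper)."""
    name: str
    dim: int
    heads: int
    layers: int

    @property
    def head_dim(self) -> int:
        # Each attention head works on an equal slice of the model dimension.
        assert self.dim % self.heads == 0, "dim must be divisible by heads"
        return self.dim // self.heads

    def approx_params(self, ffn_mult: int = 4) -> int:
        # Rough per-layer weight count: 4*d^2 for the attention projections
        # (Q, K, V, output) plus 2*ffn_mult*d^2 for the feed-forward block.
        # ffn_mult=4 is an assumption; embeddings, biases and norms are ignored.
        per_layer = (4 + 2 * ffn_mult) * self.dim ** 2
        return self.layers * per_layer


CONFIGS = [
    TransformerConfig("The Paper", dim=1024, heads=16, layers=12),
    TransformerConfig("LJSpeech Model", dim=256, heads=8, layers=6),
    TransformerConfig("LibriTTS Model", dim=1024, heads=16, layers=12),
]

for cfg in CONFIGS:
    print(f"{cfg.name}: head_dim={cfg.head_dim}, "
          f"~{cfg.approx_params() / 1e6:.1f}M weights per transformer stack")
```

Under these assumptions the LJSpeech configuration is roughly 30 times smaller than the other two, which is consistent with it fitting on a single 12GB GPU.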

Model Overview

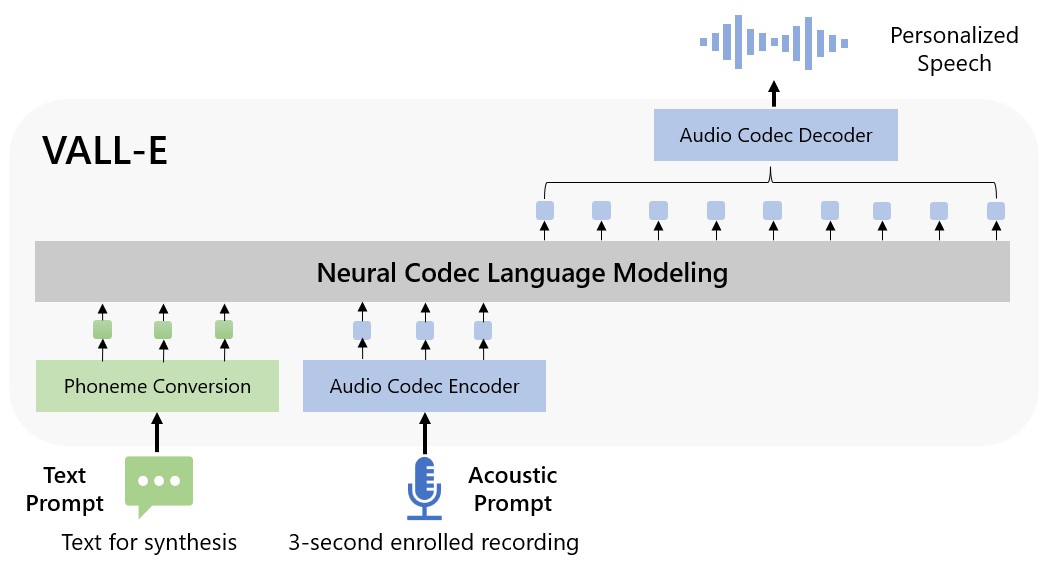

The overview of VALL-E. Unlike the previous pipeline (e.g., phoneme → mel-spectrogram → waveform), the pipeline of VALL-E is phoneme → discrete code → waveform. VALL-E generates the discrete audio codec codes based on phoneme and acoustic code prompts, corresponding to the target content and the speaker's voice. VALL-E directly enables various speech synthesis applications, such as zero-shot TTS, speech editing, and content creation combined with other generative AI models like GPT-3.
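As a rough illustration of the "discrete code" stage, the sketch below counts how many codec tokens a prompt turns into. The numbers (75 frames per second, 8 codebooks, 1024 entries per codebook) are assumptions based on the EnCodec 24 kHz, 6 kbps setting described in the VALL-E paper; this page does not state them, and `CodecSpec` is a hypothetical helper, not part of any library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CodecSpec:
    # Assumed values (EnCodec 24 kHz at 6 kbps, per the VALL-E paper);
    # they are not stated on this page.
    frame_rate_hz: int = 75      # codec frames per second of audio
    num_codebooks: int = 8       # residual quantizers per frame
    codebook_size: int = 1024    # entries per codebook

    def frames(self, seconds: float) -> int:
        # Number of codec frames covering the given duration.
        return round(seconds * self.frame_rate_hz)

    def tokens(self, seconds: float) -> int:
        # Every frame carries one code from each codebook.
        return self.frames(seconds) * self.num_codebooks

    def bitrate_bps(self) -> int:
        # Bits needed to index one codebook entry (10 bits for 1024 entries).
        bits_per_code = (self.codebook_size - 1).bit_length()
        return self.frame_rate_hz * self.num_codebooks * bits_per_code


spec = CodecSpec()
prompt_seconds = 3.0  # length of the enrolled recording mentioned in the abstract

print("frames in prompt:", spec.frames(prompt_seconds))
print("codec tokens in prompt:", spec.tokens(prompt_seconds))
print("implied bitrate (bps):", spec.bitrate_bps())
```

With these assumed settings, the 3-second acoustic prompt becomes a 225 x 8 grid of discrete codes, which is what the language model conditions on alongside the phoneme sequence.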

LJSpeech Samples

| Text | Speaker Prompt | Ground Truth | LJSpeech Model |
|---|---|---|---|
| In addition, the proposed legislation will insure. | | | |
| During the period the Commission was giving thought to this situation, | | | |

LibriSpeech Samples

| Text | Speaker Prompt | Ground Truth | VALL-E | LibriTTS Model |
|---|---|---|---|---|
| They moved thereafter cautiously about the hut groping before and about them to find something to show that Warrenton had fulfilled his mission. | | | | |
| And lay me down in thy cold bed and leave my shining lot. | | | | |
| Number ten, fresh nelly is waiting on you, good night husband. | | | | |
| Yea, his honourable worship is within, but he hath a godly minister or two with him, and likewise a leech. | | | | |

Acoustic Environment Maintenance

VALL-E can synthesize personalized speech while maintaining the acoustic environment of the speaker prompt. The audio and transcriptions are sampled from the Fisher dataset.

| Text | Speaker Prompt | Ground Truth | VALL-E | LibriTTS Model |
|---|---|---|---|---|
| I think it's like you know um more convenient too. | | | | |
| Um we have to pay have this security fee just in case she would damage something but um. | | | | |
| Everything is run by computer but you got to know how to think before you can do a computer. | | | | |
| As friends thing I definitely I've got more male friends. | | | | |

Speaker’s Emotion Maintenance

VALL-E can synthesize personalized speech while maintaining the emotion in the speaker prompt. The audio prompts are sampled from the Emotional Voices Database.

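| Text | Emotion | Speaker Prompt | VALL-E | LibriTTS Model |
|---|---|---|---|---|
| We have to reduce the number of plastic bags. | Anger | | | |
| We have to reduce the number of plastic bags. | Sleepy | | | |
| We have to reduce the number of plastic bags. | Neutral | | | |
| We have to reduce the number of plastic bags. | Amused | | | |
| We have to reduce the number of plastic bags. | Disgusted | | | |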

Ethics Statement

To avoid abuse, well-trained models and services will not be provided.